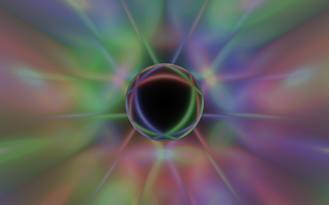

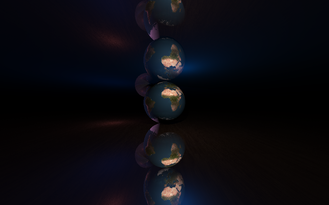

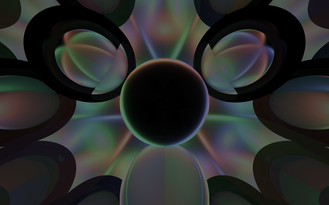

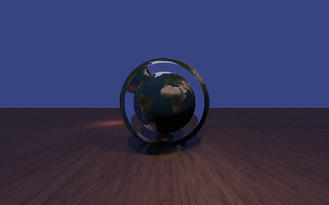

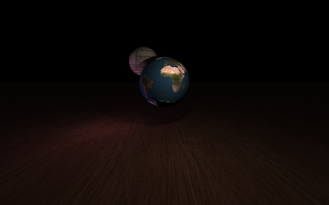

This is a small learning project in which I rewrote my CPU-based raytracer as a shader in order to learn GLSL. The end result renders spheres and planes lit by directional and spherical lights, including reflective and refractive surfaces, all in real time.

Introduction

I was inspired to do this project after reading this great series of blog posts by Brook Heisler titled 'Writing a Raytracer in Rust', which you can check out here.

As it turns out, creating a raytracer is not nearly as hard as it sounds. After creating my own CPU-based raytracer in Rust, I was able to render some pretty cool-looking images.

The problem was that images could take a while to render, especially at higher resolutions. Every pixel's ray can be traced independently of every other pixel, so raytracing parallelises extremely well. That makes it a perfect fit for a GPU (and I'd also been looking for an excuse to learn shader programming). I therefore decided to rewrite the raytracer as a shader to get a large speedup, and perhaps even get it to run in real time.
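To make the parallelism point concrete, here is a minimal CPU-side sketch in Rust. It is not code from the project. `trace_pixel` is a hypothetical stand-in for tracing one pixel's ray. The image is split into bands of rows, and each band is rendered on its own thread with `std::thread::scope`. No pixel depends on any other, so the threads never need to synchronise. A GPU exploits the same property, just with far more invocations.

```rust
use std::thread;

/// Hypothetical stand-in for tracing one pixel's ray; a real raytracer would
/// compute a colour here. It depends only on the pixel's own coordinates.
fn trace_pixel(x: usize, y: usize) -> f32 {
    ((x * 7 + y * 13) % 16) as f32 / 15.0
}

/// Renders a `width` x `height` greyscale image on up to `threads` threads.
/// Assumes width and height are non-zero. Each thread owns a disjoint band of
/// rows, so no locking is needed.
pub fn render_parallel(width: usize, height: usize, threads: usize) -> Vec<f32> {
    let threads = threads.max(1);
    let rows_per_thread = (height + threads - 1) / threads; // ceiling division
    let mut pixels = vec![0.0; width * height];
    thread::scope(|s| {
        for (band, chunk) in pixels.chunks_mut(rows_per_thread * width).enumerate() {
            s.spawn(move || {
                for (i, px) in chunk.iter_mut().enumerate() {
                    // Recover the pixel's global index from the band and the offset inside it.
                    let index = band * rows_per_thread * width + i;
                    *px = trace_pixel(index % width, index / width);
                }
            });
        }
    });
    pixels
}
```

The result is identical to a single-threaded loop over all pixels, whatever the thread count.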

Creating the Shader

First, near the top of the file, I declared two descriptors:

```glsl
layout(set = 0, binding = 0, rgba8) uniform writeonly image2D img;

layout(set = 0, binding = 1) uniform readonly Input {
    int width;
    int height;
    vec3 view_position;
    float view_angle;
    float time;
};
```

A descriptor is how a shader accesses a resource, such as an image or a buffer, that is supplied by the host program. The first descriptor is the storage image the output will be written to. The second is a uniform buffer containing the input data the raytracer needs.
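The host has to fill the `Input` buffer with exactly the byte layout the shader expects. Uniform blocks in Vulkan GLSL default to the std140 layout, assuming no other layout qualifier is given. Under std140 a `vec3` is aligned like a `vec4`, to 16 bytes, while `int` and `float` are aligned to 4. The following Rust sketch computes member offsets under those rules. The `Member` enum and `std140_layout` function are hypothetical helpers, not part of Vulkano. The sketch shows where the 8 bytes of padding after `height` come from, which is what the `_dummy0` field in the host code pads out.

```rust
/// The member types used by the Input block (hypothetical helper type).
#[derive(Clone, Copy)]
pub enum Member {
    Int,
    Float,
    Vec3,
}

impl Member {
    /// Size in bytes.
    fn size(self) -> u32 {
        match self {
            Member::Int | Member::Float => 4,
            Member::Vec3 => 12,
        }
    }

    /// std140 base alignment in bytes: a vec3 is aligned like a vec4.
    fn align(self) -> u32 {
        match self {
            Member::Int | Member::Float => 4,
            Member::Vec3 => 16,
        }
    }
}

/// Returns `(offset, padding_before)` for each member, in declaration order.
pub fn std140_layout(members: &[Member]) -> Vec<(u32, u32)> {
    let mut cursor = 0;
    members
        .iter()
        .map(|m| {
            // Round the cursor up to the member's alignment.
            let offset = (cursor + m.align() - 1) / m.align() * m.align();
            let padding = offset - cursor;
            cursor = offset + m.size();
            (offset, padding)
        })
        .collect()
}
```

For `int width; int height; vec3 view_position; float view_angle; float time;` the offsets are 0, 4, 16, 28 and 32. The only gap is 8 bytes before `view_position`. `view_angle` fits in the fourth slot of the 16-byte row that `view_position` occupies.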

Towards the end of the file, I have a main function that looks like this:

```glsl
void main() {
    // ...

    // Create and trace the ray
    Ray ray = createPrimeRay();
    vec3 col = radience(ray);

    // Output image
    vec4 to_write = vec4(col, 1.0);
    imageStore(img, ivec2(gl_GlobalInvocationID.xy), to_write);
}
```

This function runs once for each pixel of the image. The createPrimeRay function builds the primary ray for that pixel, taking into account the position, angle, and field of view of the camera.
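The body of createPrimeRay isn't shown here, so the following is a Rust sketch of one common way to build a primary ray, not the project's implementation. Several things are assumptions:

- The hypothetical `FOV_DEGREES` is a vertical field of view.
- `view_angle` is a yaw rotation about the vertical (y) axis, in radians.
- The unrotated camera looks down -z.
- Pixel (0, 0) is the top-left of the image.

```rust
/// A minimal 3-component vector, just enough for this sketch.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Vec3 {
    pub x: f32,
    pub y: f32,
    pub z: f32,
}

impl Vec3 {
    fn normalize(self) -> Vec3 {
        let len = (self.x * self.x + self.y * self.y + self.z * self.z).sqrt();
        Vec3 { x: self.x / len, y: self.y / len, z: self.z / len }
    }
}

pub struct Ray {
    pub origin: Vec3,
    pub direction: Vec3,
}

/// Hypothetical vertical field of view; the actual value used is not stated.
const FOV_DEGREES: f32 = 90.0;

pub fn create_prime_ray(
    x: u32,
    y: u32,
    width: u32,
    height: u32,
    view_position: Vec3,
    view_angle: f32, // assumed: yaw about the y axis, in radians
) -> Ray {
    let fov_adjustment = (FOV_DEGREES.to_radians() / 2.0).tan();
    let aspect_ratio = width as f32 / height as f32;
    // Map the pixel centre to [-1, 1]; scale x by the aspect ratio so pixels stay square.
    let sensor_x = (((x as f32 + 0.5) / width as f32) * 2.0 - 1.0) * aspect_ratio * fov_adjustment;
    // Flip y: row 0 is the top of the image, but +y points up in camera space.
    let sensor_y = (1.0 - ((y as f32 + 0.5) / height as f32) * 2.0) * fov_adjustment;
    let d = Vec3 { x: sensor_x, y: sensor_y, z: -1.0 }.normalize();
    // Rotate about the y axis; a rotation keeps the direction unit length.
    let (s, c) = view_angle.sin_cos();
    let direction = Vec3 { x: d.x * c + d.z * s, y: d.y, z: -d.x * s + d.z * c };
    Ray { origin: view_position, direction }
}
```

In the shader, the pixel coordinates would come from `gl_GlobalInvocationID.xy`, and the image size, camera position and angle from the `Input` block.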

The radience function then traces this ray and returns the colour for the pixel, and the next two lines write that colour to the output image. gl_GlobalInvocationID is a built-in input of GLSL compute shaders that holds the global index of the current invocation. Since one invocation is run per pixel, its x and y components are the coordinates of the current pixel.
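For one invocation to run per pixel, the host has to dispatch enough workgroups to cover the whole image. The sketch below shows that bookkeeping in Rust. It assumes a hypothetical 8x8 local workgroup size, since the shader's `local_size_x`/`local_size_y` aren't shown. It uses the GLSL definition `gl_GlobalInvocationID = gl_WorkGroupID * gl_WorkGroupSize + gl_LocalInvocationID`. Rounding the workgroup count up can create invocations past the image edge. In the shader these would typically return early before calling `imageStore`.

```rust
/// Hypothetical local workgroup size (local_size_x, local_size_y).
pub const LOCAL_SIZE: [u32; 2] = [8, 8];

/// Number of workgroups per dimension so that every pixel gets an invocation.
pub fn dispatch_size(width: u32, height: u32) -> [u32; 3] {
    [
        (width + LOCAL_SIZE[0] - 1) / LOCAL_SIZE[0], // ceiling division
        (height + LOCAL_SIZE[1] - 1) / LOCAL_SIZE[1],
        1,
    ]
}

/// Mirrors gl_GlobalInvocationID.xy = gl_WorkGroupID.xy * gl_WorkGroupSize.xy + gl_LocalInvocationID.xy.
pub fn global_invocation_id(workgroup: [u32; 2], local: [u32; 2]) -> [u32; 2] {
    [
        workgroup[0] * LOCAL_SIZE[0] + local[0],
        workgroup[1] * LOCAL_SIZE[1] + local[1],
    ]
}

/// True if this invocation corresponds to a real pixel rather than rounding overhang.
pub fn covers_pixel(id: [u32; 2], width: u32, height: u32) -> bool {
    id[0] < width && id[1] < height
}
```

An 800x600 image needs 100x75 workgroups of 8x8. An 801-pixel-wide image needs 101 columns of workgroups, and the extra invocations in the last column do no work.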

One of the biggest differences between CPU programming and shader programming is that GLSL does not allow recursion. This was a bit of a problem, since raytracing is naturally recursive. When a ray hits a reflective or refractive object, a new ray is cast from the hit point to simulate light 'bouncing'. I got around this by defining a 'recursive depth' constant that sets the number of iterations of a for loop, with the tracing code run inside that loop.
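Here is a Rust sketch of that transformation. It is limited to mirror reflections on spheres with greyscale colours to keep it short. `MAX_DEPTH` plays the role of the 'recursive depth' constant; the scene, shading model and all names are hypothetical.

`radiance_recursive` is how the algorithm would naturally be written on the CPU. `radiance_iterative` instead carries the current ray and a `throughput` weight from one iteration to the next. The weight records how much the current bounce still contributes to the pixel. This is the kind of loop that can be written in GLSL.

```rust
/// Hypothetical maximum number of bounces (the 'recursive depth' constant).
const MAX_DEPTH: u32 = 4;
/// Offset that stops a reflected ray immediately re-hitting the surface it left.
const EPS: f32 = 1e-4;
/// Brightness seen when a ray escapes the scene.
const SKY: f32 = 1.0;

#[derive(Clone, Copy)]
struct V3(f32, f32, f32);

impl V3 {
    fn add(self, o: V3) -> V3 { V3(self.0 + o.0, self.1 + o.1, self.2 + o.2) }
    fn sub(self, o: V3) -> V3 { V3(self.0 - o.0, self.1 - o.1, self.2 - o.2) }
    fn scale(self, k: f32) -> V3 { V3(self.0 * k, self.1 * k, self.2 * k) }
    fn dot(self, o: V3) -> f32 { self.0 * o.0 + self.1 * o.1 + self.2 * o.2 }
    fn normalize(self) -> V3 { self.scale(1.0 / self.dot(self).sqrt()) }
}

#[derive(Clone, Copy)]
struct Ray { origin: V3, dir: V3 } // dir is assumed to be unit length

struct Sphere { centre: V3, radius: f32, colour: f32, reflectivity: f32 }

/// Distance to the nearest intersection in front of the ray origin, if any.
fn intersect(ray: Ray, s: &Sphere) -> Option<f32> {
    let oc = ray.origin.sub(s.centre);
    let b = oc.dot(ray.dir);
    let disc = b * b - (oc.dot(oc) - s.radius * s.radius);
    if disc < 0.0 { return None; }
    let sq = disc.sqrt();
    if -b - sq > EPS { Some(-b - sq) } else if -b + sq > EPS { Some(-b + sq) } else { None }
}

fn closest_hit(ray: Ray, scene: &[Sphere]) -> Option<(f32, &Sphere)> {
    scene
        .iter()
        .filter_map(|s| intersect(ray, s).map(|t| (t, s)))
        .min_by(|a, b| a.0.partial_cmp(&b.0).unwrap())
}

/// Mirror-reflects the ray about the sphere's normal at distance t.
fn reflect_at(ray: Ray, t: f32, s: &Sphere) -> Ray {
    let hit = ray.origin.add(ray.dir.scale(t));
    let n = hit.sub(s.centre).normalize();
    let dir = ray.dir.sub(n.scale(2.0 * ray.dir.dot(n)));
    Ray { origin: hit.add(n.scale(EPS)), dir }
}

/// Recursive formulation, as it might be written on the CPU.
fn radiance_recursive(ray: Ray, scene: &[Sphere], depth: u32) -> f32 {
    if depth == 0 { return 0.0; }
    match closest_hit(ray, scene) {
        None => SKY,
        Some((t, s)) => {
            let local = s.colour * (1.0 - s.reflectivity);
            local + s.reflectivity * radiance_recursive(reflect_at(ray, t, s), scene, depth - 1)
        }
    }
}

/// Loop formulation: the same result without recursion.
fn radiance_iterative(mut ray: Ray, scene: &[Sphere]) -> f32 {
    let mut colour = 0.0;
    let mut throughput = 1.0; // weight of the current bounce in the final pixel
    for _ in 0..MAX_DEPTH {
        match closest_hit(ray, scene) {
            None => { colour += throughput * SKY; break; }
            Some((t, s)) => {
                colour += throughput * s.colour * (1.0 - s.reflectivity);
                throughput *= s.reflectivity;
                ray = reflect_at(ray, t, s);
            }
        }
    }
    colour
}
```

Take a ray bouncing between two facing mirrors with colours 0.8 and 0.4 and reflectivity 0.5. Both versions give 0.4 + 0.1 + 0.1 + 0.025 = 0.625 after four bounces, and the depth limit cuts off the rest.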

Running the Shader with Vulkan

To run the shader and output the image to the screen, I used the Vulkan API. Specifically, I used a Rust wrapper for the API called Vulkano.

To present rendered images to a window, Vulkan uses what's called a swap chain. This is essentially a queue of images: the image at the front is displayed in the window while the others (the back buffers) are still being drawn to by the shader. This prevents partially drawn frames from being displayed.
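As a rough mental model only, a FIFO swap chain can be pictured as a pool of images that moves between three states: free to draw into, finished and waiting to be shown, and on screen. This is not how Vulkano or the driver implements it, and `ToySwapchain` and its methods are hypothetical, not Vulkano API. The Rust sketch below shows why the image on screen is never the one being drawn into.

```rust
use std::collections::VecDeque;

/// Toy model of a FIFO swap chain (hypothetical, not Vulkano's API).
pub struct ToySwapchain {
    free: VecDeque<usize>,        // images the renderer may draw into
    presentable: VecDeque<usize>, // finished frames waiting to be shown
    on_screen: Option<usize>,     // image currently displayed
}

impl ToySwapchain {
    pub fn new(image_count: usize) -> Self {
        ToySwapchain { free: (0..image_count).collect(), presentable: VecDeque::new(), on_screen: None }
    }

    /// Hands out an image that is neither on screen nor queued; None if all are busy.
    pub fn acquire(&mut self) -> Option<usize> {
        self.free.pop_front()
    }

    /// Queues a fully drawn image for display, in submission order.
    pub fn present(&mut self, image: usize) {
        self.presentable.push_back(image);
    }

    /// At the next vertical blank, the oldest queued frame replaces the one
    /// on screen, and the old front image becomes free to draw into again.
    pub fn vblank(&mut self) {
        if let Some(next) = self.presentable.pop_front() {
            if let Some(old) = self.on_screen.replace(next) {
                self.free.push_back(old);
            }
        }
    }

    pub fn on_screen(&self) -> Option<usize> {
        self.on_screen
    }
}
```

With three images, one can be on screen while the other two are being rendered. A fourth acquire has to wait until a frame is swapped out.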

After loading the shader and getting device information, I created the window and swap chain:

```rust
// Creating a window
let surface = WindowBuilder::new()
    .with_resizable(false)
    .with_inner_size(Size::Physical(PhysicalSize { width, height }))
    .build_vk_surface(&events_loop, instance.clone())
    .unwrap();

// Creating a swapchain
let caps = surface
    .capabilities(physical)
    .expect("failed to get surface capabilities");

// Use the surface's current size if it reports one, otherwise the requested size.
let dimensions = caps.current_extent.unwrap_or([width, height]);
let alpha = caps.supported_composite_alpha.iter().next().unwrap();
let format = caps.supported_formats[0].0;

let (swapchain, images) = Swapchain::new(
    device.clone(),
    surface.clone(),
    caps.min_image_count,
    format,
    dimensions,
    1, // number of array layers per image
    ImageUsage {
        // transfer_destination lets the shader's output be copied into these images
        color_attachment: true,
        transfer_destination: true,
        ..ImageUsage::none()
    },
    &queue,
    SurfaceTransform::Identity,
    alpha,
    PresentMode::Fifo, // frames are shown in order, synced to vertical blank
    FullscreenExclusive::Default,
    true, // clipped
    ColorSpace::SrgbNonLinear,
)
.expect("failed to create swapchain");
```

Then, in the event loop, which runs every frame, I create the buffer containing the shader inputs and bind it to a descriptor set so it's accessible to the shader:

```rust
// `_dummy0` is padding in the struct generated from the shader's Input block.
// Under std140 layout rules `view_position` (a vec3) must start on a 16-byte
// boundary, so 8 bytes of padding follow `height`.
let params_buffer = CpuAccessibleBuffer::from_data(
    device.clone(),
    BufferUsage::all(),
    false, // host_cached
    cs::ty::Input {
        width: width as i32,
        height: height as i32,
        view_position,
        view_angle,
        time: now.elapsed().as_secs_f32(),
        _dummy0: [0, 0, 0, 0, 0, 0, 0, 0],
    },
)
.expect("failed to create params buffer");

// Bind the output image (binding 0) and the parameters (binding 1) to set 0.
let layout = compute_pipeline.layout().descriptor_set_layout(0).unwrap();
let set = Arc::new(
    PersistentDescriptorSet::start(layout.clone())
        .add_image(image.clone())
        .unwrap()
        .add_buffer(params_buffer)
        .unwrap()
        .build()
        .unwrap(),
);
```

Then all that's left is to dispatch the shader and copy the output image to the swap chain image.

The full source code for this project can be found on GitHub here.